What Are Vector Embeddings? A Beginner's Guide to How LLMs Understand Language

Key Points

What is the purpose of vector embeddings in the context of AI and language processing?

Vector embeddings convert non-numeric data like words or sentences into numerical representations, allowing AI models to understand and process human language in a meaningful way.

How do embeddings help computers understand relationships between words?

Embeddings capture context and meaning in numerical form, enabling computers to identify that words with similar meanings or usages have similar vector representations in the embedding space.

Where do vector embeddings typically come from?

Embeddings are generated by neural networks trained on large datasets, using models like Word2Vec, GloVe, BERT, and GPT. These models learn word relationships and context to create accurate embeddings.

What is cosine similarity and how is it used to measure the similarity of embeddings?

Cosine similarity is the cosine of the angle between two vectors. It is used to determine how similar two embeddings are, with values ranging from 1 (pointing in the same direction) to -1 (pointing in opposite directions), which helps in applications like semantic search.

How can you practically utilize embeddings in real-world applications?

Embeddings can be used for semantic search, text clustering, recommendation systems, and improving models with few-shot learning. Tools like OpenAI APIs and vector databases make it easy to implement embeddings in various projects.

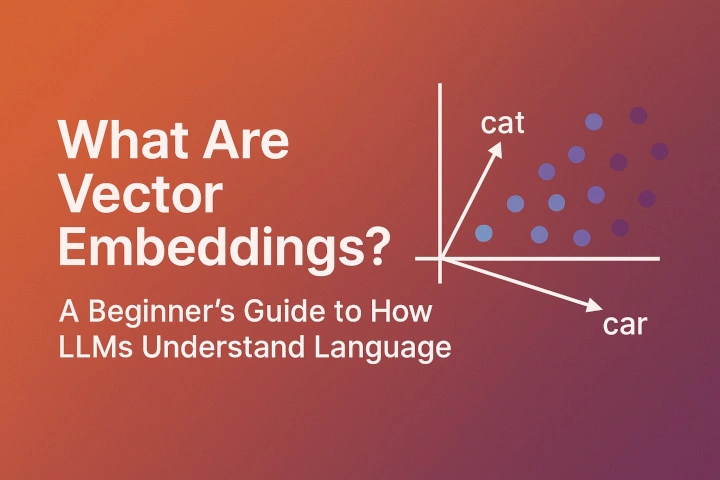

Imagine you’re trying to teach a computer the difference between the words cat and car. To us humans, it’s easy — one is a furry animal, the other is a vehicle. But how do you explain that to a machine that only understands numbers?

That’s where vector embeddings come in.

If you’re new to the world of AI, NLP (natural language processing), or large language models (LLMs) like GPT-4, vector embeddings are one of those foundational concepts that might sound intimidating — but they’re actually quite intuitive once you grasp the core idea.

In this post, I’ll break down what vector embeddings are, why they matter, and how they power some of the most impressive AI systems in the world today.

What Is a Vector Embedding?

At its core, a vector embedding is just a way of turning something — like a word, sentence, image, or even a product — into a list of numbers.

More specifically:

- It’s a numerical representation of something non-numeric.

- It’s usually a list (or array) of floating point numbers.

- The goal is to capture meaning, context, or relationships in that numeric form.

For example:

"cat" → [0.12, -0.45, 0.67, ..., 0.03] (a 768-dimensional vector)

In the same space:

"dog" → [0.15, -0.48, 0.70, ..., 0.01]

"car" → [-0.22, 0.34, -0.56, ..., 0.90]

The beauty of this representation is that words with similar meanings or usage tend to have similar vectors.

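To make "similar vectors" concrete, here is a minimal sketch that measures how far apart the three example words are. It assumes only the first three illustrative components shown above (the rest are elided in the example), so these are toy values and not real model output. The helper name `distance` is my own.

```python
import math

# Toy vectors: only the first three illustrative components from the example.
# These are NOT real model outputs; real embeddings have hundreds of dimensions.
toy_vectors = {
    "cat": [0.12, -0.45, 0.67],
    "dog": [0.15, -0.48, 0.70],
    "car": [-0.22, 0.34, -0.56],
}

def distance(word_a, word_b):
    """Euclidean distance between two toy word vectors (smaller = more similar)."""
    return math.dist(toy_vectors[word_a], toy_vectors[word_b])

cat_dog = distance("cat", "dog")
cat_car = distance("cat", "car")
print(f"cat-dog: {cat_dog:.3f}")
print(f"cat-car: {cat_car:.3f}")  # noticeably larger than cat-dog
```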
Why Not Just Use Words Directly?

Computers can’t understand text the way we do. To a computer, the word “cat” is just a string of characters. That’s not helpful for making decisions or predictions.

What if you want your model to:

- Predict the next word in a sentence?

- Find the most similar sentence in a database?

- Cluster topics from thousands of documents?

You can’t do that efficiently with raw text. You need a format that machines can work with — numerical vectors that preserve meaning.

That’s the job of an embedding.

Where Do Embeddings Come From?

Embeddings are typically generated by neural networks, trained on massive amounts of data.

A few common sources:

- Word2Vec, GloVe: Early models trained to capture word relationships (e.g. king - man + woman ≈ queen, meaning the result of that arithmetic lands closest to the vector for queen).

- BERT, GPT: More modern transformer-based models that generate contextual embeddings — the same word can have different embeddings depending on how it’s used.

- OpenAI Embeddings API: Gives you pre-trained embeddings for any text you input, using dedicated embedding models (separate from the chat models behind ChatGPT).

These models “learn” which words appear near each other and in what contexts, then encode that into the embedding space.
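The king/queen arithmetic is easier to trust once you see it run. Below is a small sketch using hand-made 2-D vectors that I designed so the analogy works; real Word2Vec or GloVe vectors are learned from data, and the analogy only holds approximately there. The `analogy` helper and the vector values are assumptions for illustration.

```python
import math

# Hand-made 2-D vectors: axis 0 ~ "royalty", axis 1 ~ "gender".
# Purely illustrative; real Word2Vec/GloVe vectors are learned, not designed.
vectors = {
    "king":  [1.0,  1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0,  1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c):
    """Return the word closest to vector(a) - vector(b) + vector(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

result = analogy("king", "man", "woman")
print(result)
```

Excluding the input words from the candidates is a common convention for this kind of test; without it, the nearest vector is often one of the inputs.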

How Do LLMs Use Embeddings?

Large language models like GPT use embeddings at nearly every stage:

- Input Encoding: When you type a prompt into ChatGPT, your text is tokenized and turned into embeddings. These embeddings carry the semantic meaning of your words into the model.

- Internal Computation: Throughout the model’s layers, embeddings are transformed, mixed, and evolved to generate a deep understanding of your input.

- Output Prediction: The final-layer vectors are used to score every token in the vocabulary, and the chosen tokens are decoded back into human-readable text, which becomes your response.

So in a sense, embeddings are the language that LLMs use to think.
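Here is a rough sketch of the first and last of those stages, with the middle one stubbed out. Everything in it is a simplifying assumption: a four-word vocabulary, whitespace tokenization (real models use subword tokenizers), made-up 3-D vectors, and an output step that reuses the input embedding table to score tokens. It is not how any particular model is implemented.

```python
import math

# Hypothetical 4-token vocabulary with 3-dimensional embeddings (toy values).
vocab = ["the", "cat", "sat", "mat"]
embedding_table = [
    [0.1, 0.0, 0.2],  # "the"
    [0.9, 0.1, 0.0],  # "cat"
    [0.0, 0.8, 0.1],  # "sat"
    [0.7, 0.0, 0.3],  # "mat"
]
token_to_id = {tok: i for i, tok in enumerate(vocab)}

def encode(text):
    """Input encoding: whitespace-tokenize, then look up one vector per token."""
    return [embedding_table[token_to_id[tok]] for tok in text.lower().split()]

def next_token_probs(hidden):
    """Output prediction: score each vocabulary entry by dot product, then softmax."""
    logits = [sum(h * e for h, e in zip(hidden, row)) for row in embedding_table]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]

inputs = encode("the cat")
# Stand-in for the transformer layers: we simply reuse the last input vector.
hidden = inputs[-1]
probs = next_token_probs(hidden)
predicted = vocab[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

Because the stand-in skips the internal computation entirely, the "prediction" here just echoes the token whose embedding best matches the last input. The point is the shape of the pipeline: tokens in, vectors through, probabilities over the vocabulary out.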

What Can You Do With Embeddings?

Once you have an embedding, you can use it in tons of practical ways:

1. Semantic Search

You can compare the embedding of a query against embeddings of documents to find the most relevant matches — even if the exact words don’t overlap.

Example: Searching “how to build a REST API” might surface a tutorial titled “Creating web backends with Flask” — because the embeddings are similar, even though the words are different.
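A minimal sketch of that search loop is below. The 3-D document and query vectors are hypothetical stand-ins I wrote by hand; in a real system they would come from the same embedding model, and the `search` helper is my own naming.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical precomputed embeddings (toy 3-D values, not real model output).
documents = {
    "Creating web backends with Flask": [0.9, 0.1, 0.1],
    "Ten easy pancake recipes": [0.1, 0.9, 0.0],
    "Getting to the airport on time": [0.0, 0.2, 0.9],
}

query_text = "how to build a REST API"
query_embedding = [0.8, 0.2, 0.1]  # assumed output of the same embedding model

def search(query_vec, docs, top_k=2):
    """Rank documents by cosine similarity to the query, highest first."""
    scored = [(cosine(query_vec, vec), title) for title, vec in docs.items()]
    return sorted(scored, reverse=True)[:top_k]

results = search(query_embedding, documents)
for score, title in results:
    print(f"{score:.2f}  {title}")
```

Note that the query and the top result share no words at all; the ranking comes entirely from the vectors.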

2. Text Clustering & Classification

Group similar content together, detect topics, or classify intent/sentiment — all by operating in embedding space.
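One simple way to classify in embedding space is to average the vectors for each label and pick the nearest average. The sketch below does that with hypothetical 2-D vectors and two made-up intent labels; nearest-centroid is just one option I picked for brevity, not the only or best method.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def centroid(vectors):
    """Component-wise mean of a list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

# Hypothetical labeled embeddings (toy 2-D values) for two intents.
labeled = {
    "billing": [[0.9, 0.1], [0.8, 0.2]],
    "support": [[0.1, 0.9], [0.2, 0.7]],
}
centroids = {label: centroid(vecs) for label, vecs in labeled.items()}

def classify(vec):
    """Return the label whose centroid is most similar to vec."""
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

predicted = classify([0.7, 0.3])
print(predicted)
```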

3. Recommendation Systems

Suggest products, videos, or articles by finding items with similar embeddings to ones the user has engaged with.
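As a sketch of how that can work: average the embeddings of what the user has engaged with to get a "taste" vector, then rank everything else against it. The catalog titles and 3-D vectors below are invented for illustration, and averaging is only one simple way to build a user profile.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical catalog with toy 3-D item embeddings.
catalog = {
    "Intro to Python": [0.9, 0.1, 0.0],
    "Advanced Python": [0.8, 0.2, 0.1],
    "Sourdough basics": [0.0, 0.9, 0.2],
    "Python for data science": [0.7, 0.1, 0.3],
}
engaged = ["Intro to Python", "Advanced Python"]

def recommend(engaged_titles, items, top_k=1):
    """Rank unseen items by similarity to the mean of the engaged items."""
    seen = [items[title] for title in engaged_titles]
    profile = [sum(column) / len(seen) for column in zip(*seen)]
    unseen = [title for title in items if title not in engaged_titles]
    ranked = sorted(unseen, key=lambda t: cosine(profile, items[t]), reverse=True)
    return ranked[:top_k]

suggestions = recommend(engaged, catalog)
print(suggestions)
```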

4. Few-shot Prompting & Retrieval-Augmented Generation (RAG)

Find the most relevant examples or facts from a knowledge base to “feed” into an LLM prompt — boosting accuracy and reducing hallucinations.
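The retrieval half of RAG can be sketched in a few lines: find the closest facts, then paste them into the prompt. The knowledge base, its 2-D vectors, and the prompt wording are all hypothetical; the call to an actual LLM is left out on purpose.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical knowledge base: (fact, toy 2-D embedding) pairs.
knowledge_base = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1]),
    ("Our office is closed on public holidays.", [0.1, 0.9]),
    ("Refund requests require an order number.", [0.8, 0.3]),
]

def retrieve(query_vec, kb, top_k=2):
    """Return the top_k facts most similar to the query embedding."""
    ranked = sorted(kb, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [fact for fact, _ in ranked[:top_k]]

def build_prompt(question, facts):
    """Assemble a prompt that asks the LLM to answer from the retrieved facts."""
    context = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

question = "How long do refunds take?"
question_embedding = [0.85, 0.2]  # assumed output of the same embedding model
facts = retrieve(question_embedding, knowledge_base)
prompt = build_prompt(question, facts)
print(prompt)
```

The resulting `prompt` string is what you would send to the model, so the answer is grounded in the retrieved facts instead of the model's memory alone.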

How Is Similarity Measured?

The most common way to compare embeddings is with cosine similarity, which looks at the angle between two vectors rather than their lengths.

A cosine similarity of:

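- 1.0 = vectors pointing in exactly the same direction (a perfect match)

- 0.0 = vectors at right angles, usually read as unrelated

- -1.0 = vectors pointing in opposite directions (rare in practice, and not a reliable signal of "opposite meaning")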

In practice, embeddings that are “closer” together in vector space are assumed to be more semantically similar.
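For reference, cosine similarity is the dot product of the two vectors divided by the product of their lengths. Here is a small plain-Python sketch with three hand-picked pairs that hit the landmark values; the function name and the example vectors are my own.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Same direction, different length: similarity close to 1.
same_direction = cosine_similarity([1.0, 2.0], [2.0, 4.0])
# Right angle: similarity of 0.
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 3.0])
# Opposite direction: similarity close to -1.
opposite = cosine_similarity([1.0, 2.0], [-1.0, -2.0])

print(round(same_direction, 6), round(orthogonal, 6), round(opposite, 6))
```

The first pair shows why length does not matter: doubling a vector leaves the angle, and so the score, unchanged.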

Example: Comparing Sentences with Embeddings

Let’s say we have two sentences:

- “How do I make pancakes?”

- “What’s the recipe for pancakes?”

Even though the wording is different, the meaning is very close. Their embeddings will likely be very similar.

Now compare with:

- “How do I get to the airport?”

Totally different meaning — that embedding will be farther away in space.

This ability to generalize meaning across different wordings is what makes embeddings so powerful for real-world applications.

How Can You Try Embeddings Yourself?

You don’t have to train your own neural network — you can use APIs and tools to experiment today:

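- OpenAI Embeddings API: Send a string of text, get a vector back (1536 dimensions for models such as text-embedding-3-small; the size depends on the model).

- Cohere, Hugging Face Transformers, SentenceTransformers: Cohere offers a hosted embeddings API, while Hugging Face Transformers and SentenceTransformers are open-source libraries for running embedding models yourself.

- Vector DBs: Tools like Pinecone and Weaviate (vector databases) and FAISS (a similarity-search library) are designed to store and search embeddings at scale.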

- Visualizers: Use PCA or t-SNE to reduce dimensions and explore how embeddings cluster visually.
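If you want to try the pancake and airport sentences from earlier, here is one possible starting point using SentenceTransformers. It is a sketch under assumptions: you have run `pip install sentence-transformers`, the `all-MiniLM-L6-v2` model is my choice for the example (any sentence embedding model would do), and the helper names are my own. The library import sits inside a function so the rest of the code runs without it.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def embed_with_sentence_transformers(sentences, model_name="all-MiniLM-L6-v2"):
    """Embed sentences with SentenceTransformers (downloads weights on first use)."""
    from sentence_transformers import SentenceTransformer  # lazy import
    model = SentenceTransformer(model_name)
    return model.encode(sentences).tolist()

def most_similar_pair(sentences, embed):
    """Embed all sentences with `embed` and return (score, sentence_a, sentence_b)."""
    vectors = embed(sentences)
    best = None
    for i in range(len(sentences)):
        for j in range(i + 1, len(sentences)):
            score = cosine(vectors[i], vectors[j])
            if best is None or score > best[0]:
                best = (score, sentences[i], sentences[j])
    return best

sentences = [
    "How do I make pancakes?",
    "What's the recipe for pancakes?",
    "How do I get to the airport?",
]

# To run against a real model, uncomment the next line:
# print(most_similar_pair(sentences, embed_with_sentence_transformers))
```

Passing the embedding function in as an argument keeps the comparison logic independent of the provider, so you could swap in an API-based embedder later without touching `most_similar_pair`.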

Conclusion

Vector embeddings are like the secret sauce behind many of the magical things AI can do with language.

They turn messy, unpredictable human language into clean, structured representations that models can understand, reason over, and generate from. Whether you’re building a search engine, chatbot, or classification tool — embeddings are likely involved under the hood.

If you’re just getting started with AI or LLMs, getting comfortable with embeddings is one of the smartest moves you can make. Once you see how they work, a whole world of new possibilities opens up.